malloc Source Code Analysis


- glibc 2.35

Internal structures

heap

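/* A heap is a single contiguous memory region holding (coalesceable) malloc_chunks.
   It is allocated with mmap() and always starts at an address aligned to HEAP_MAX_SIZE. */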
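Because every non-main heap is mmapped at an address aligned to HEAP_MAX_SIZE, glibc can find the heap header of any chunk inside it simply by rounding the chunk address down (arena.c's heap_for_ptr relies on this). The sketch below shows only that arithmetic; the 64 MB value is an assumption matching the usual 64-bit default, and heap_base_for_addr is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: HEAP_MAX_SIZE is 64 MB, the usual 64-bit default
   (2 * DEFAULT_MMAP_THRESHOLD_MAX). Must be a power of two. */
#define HEAP_MAX_SIZE_SKETCH ((uintptr_t) 64 * 1024 * 1024)

/* Hypothetical helper: a non-main heap starts at a HEAP_MAX_SIZE-aligned
   address, so clearing the low bits of any address inside the heap gives
   the heap's start, where its heap_info header lives. */
static uintptr_t heap_base_for_addr (uintptr_t addr)
{
  assert ((HEAP_MAX_SIZE_SKETCH & (HEAP_MAX_SIZE_SKETCH - 1)) == 0);
  return addr & ~(HEAP_MAX_SIZE_SKETCH - 1);
}
```

With this value, every address from 0x08000000 to 0x0BFFFFFF maps back to the heap that starts at 0x08000000.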

chunk

struct malloc_chunk {

- allocated chunk

chunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

- free chunk

chunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

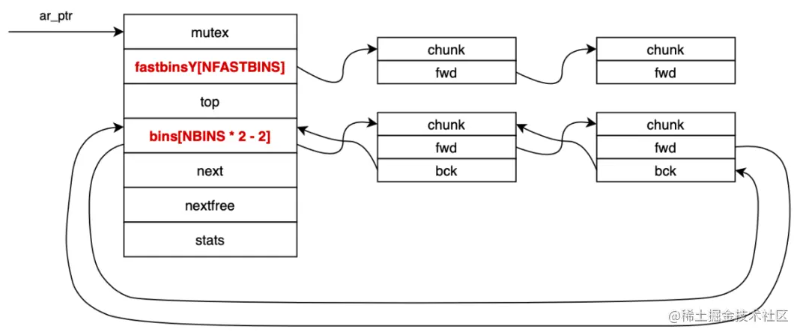
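The chunk layout boils down to a few bit operations: the low three bits of the size field hold the A|M|P flags (sizes are always multiples of the alignment), and user memory begins right after the prev_size and size fields, exactly where fd sits in a free chunk. Below is a simplified re-declaration modelled on the names in malloc.c (PREV_INUSE, IS_MMAPPED, NON_MAIN_ARENA, chunk2mem, mem2chunk, chunksize); it is an illustration rather than the glibc header, it assumes INTERNAL_SIZE_T is size_t, and set_head_sketch is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption: INTERNAL_SIZE_T is size_t, as on common builds. */
typedef size_t INTERNAL_SIZE_T;

/* Simplified re-declaration of glibc's struct malloc_chunk. */
struct malloc_chunk {
  INTERNAL_SIZE_T mchunk_prev_size;   /* size of previous chunk, if it is free */
  INTERNAL_SIZE_T mchunk_size;        /* chunk size plus A|M|P flag bits */
  struct malloc_chunk *fd;            /* free chunks only: next chunk in bin */
  struct malloc_chunk *bk;            /* free chunks only: previous chunk in bin */
  struct malloc_chunk *fd_nextsize;   /* large free chunks only */
  struct malloc_chunk *bk_nextsize;   /* large free chunks only */
};

#define SIZE_SZ        (sizeof (INTERNAL_SIZE_T))
#define PREV_INUSE     0x1   /* P: previous chunk is in use */
#define IS_MMAPPED     0x2   /* M: chunk was obtained with mmap */
#define NON_MAIN_ARENA 0x4   /* A: chunk belongs to a non-main arena */
#define SIZE_BITS      (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA)

/* User memory starts right after prev_size and size, i.e. where fd is. */
static inline void *chunk2mem (struct malloc_chunk *p)
{
  return (char *) p + 2 * SIZE_SZ;
}

static inline struct malloc_chunk *mem2chunk (void *mem)
{
  return (struct malloc_chunk *) ((char *) mem - 2 * SIZE_SZ);
}

/* Strip the flag bits to get the real size. */
static inline size_t chunksize (const struct malloc_chunk *p)
{
  return p->mchunk_size & ~(size_t) SIZE_BITS;
}

static inline int prev_inuse (const struct malloc_chunk *p)
{
  return (p->mchunk_size & PREV_INUSE) != 0;
}

static inline int chunk_is_mmapped (const struct malloc_chunk *p)
{
  return (p->mchunk_size & IS_MMAPPED) != 0;
}

/* Hypothetical helper: store a size together with its flag bits. */
static inline void set_head_sketch (struct malloc_chunk *p, size_t size,
                                    size_t flags)
{
  assert ((size & SIZE_BITS) == 0);   /* sizes are multiples of 8 or 16 */
  p->mchunk_size = size | flags;
}
```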

arena structure

static struct malloc_state main_arena =
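Inside malloc_state the regular bins are stored as a flat pointer array, two entries (fd and bk) per bin. malloc.c's bin_at turns bin i into a fake chunk pointer whose fd/bk fields overlay bins[2*(i-1)] and bins[2*(i-1)+1], so list code needs no special case for the list head. The sketch below reproduces that trick with heavily abbreviated, hypothetical struct names; only NBINS = 128 and the indexing follow glibc.

```c
#include <assert.h>
#include <stddef.h>

#define NBINS 128

struct chunk_sketch {                 /* only the header fields bin_at needs */
  size_t prev_size;
  size_t size;
  struct chunk_sketch *fd;
  struct chunk_sketch *bk;
};

struct arena_sketch {                 /* heavily abbreviated malloc_state */
  struct chunk_sketch *top;
  struct chunk_sketch *last_remainder;
  struct chunk_sketch *bins[NBINS * 2 - 2];   /* fd/bk pair per bin; bin 0 unused */
  /* binmap, next, next_free, attached_threads, system_mem and others omitted */
};

/* Like malloc.c's bin_at: a fake chunk header placed so that its fd and
   bk fields are exactly bins[2*(i-1)] and bins[2*(i-1)+1]. */
static struct chunk_sketch *bin_at (struct arena_sketch *m, int i)
{
  assert (i >= 1 && i < NBINS);
  return (struct chunk_sketch *) ((char *) &m->bins[(i - 1) * 2]
                                  - offsetof (struct chunk_sketch, fd));
}

/* Like malloc_init_state: an empty bin is a list whose head points to itself. */
static void init_bins (struct arena_sketch *m)
{
  for (int i = 1; i < NBINS; i++)
    {
      struct chunk_sketch *bin = bin_at (m, i);
      bin->fd = bin->bk = bin;
    }
}
```

Placing top and last_remainder before bins keeps the fake header of bin 1 inside the struct, which mirrors the field order glibc depends on.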

System operations in malloc

sysmalloc_mmap

static void *

sysmalloc

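- First, if there is no usable arena, or the request is at least mp_.mmap_threshold and the limit on mmapped chunks (mp_.n_mmaps_max) has not been reached, try to serve the request directly with sysmalloc_mmap.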

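- Otherwise, extend the current heap. For a non-main arena, try to grow the current heap; if it cannot grow enough, allocate a brand-new heap. For main_arena, first request memory with brk; if that fails, fall back to mmap.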

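- Where possible, merge the memory obtained from sbrk with the previous memory (the old top chunk) when the two are contiguous.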

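- Handle non-contiguous sbrk results: if this is not the first heap extension, two "double fencepost" chunks must be inserted at old_top. These fenceposts are artificial chunk headers that prevent coalescing with space malloc does not own. They are marked as in use, but they are too small to ever be allocated. Two fenceposts are needed to make the sizes and alignment work out. The remaining space of the old top chunk is then released if possible (_int_free).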


- Finally, perform the allocation by splitting the requested chunk off the (now larger) top chunk.

static void *
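The order of the decisions sysmalloc makes can be summarised as a small pure function. This is a hypothetical model, not glibc code: the struct and enum names are invented, the boolean inputs stand in for real outcomes (whether growing the heap or MORECORE would succeed), and it assumes the direct mmap succeeds, leaving out fallbacks such as trying mmap again after a failed new heap.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical labels for where sysmalloc ends up getting memory. */
enum sys_path {
  PATH_MMAP_DIRECT,     /* sysmalloc_mmap: a separate mmapped chunk */
  PATH_GROW_HEAP,       /* non-main arena: grow the current heap */
  PATH_NEW_HEAP,        /* non-main arena: allocate a new heap */
  PATH_BRK,             /* main arena: MORECORE (sbrk) succeeded */
  PATH_MMAP_FALLBACK    /* main arena: sbrk failed, use mmap instead */
};

/* Hypothetical snapshot of the state sysmalloc looks at. */
struct sys_request {
  size_t nb;               /* normalized chunk size */
  bool have_arena;         /* av != NULL */
  bool is_main_arena;      /* av == &main_arena */
  size_t mmap_threshold;   /* mp_.mmap_threshold */
  int n_mmaps;             /* mp_.n_mmaps */
  int n_mmaps_max;         /* mp_.n_mmaps_max */
  bool heap_can_grow;      /* non-main: would growing the heap succeed? */
  bool brk_ok;             /* main: would MORECORE succeed? */
};

static enum sys_path sysmalloc_path (const struct sys_request *r)
{
  /* No arena, or a large request with mmap slots left: mmap directly. */
  if (!r->have_arena
      || (r->nb >= r->mmap_threshold && r->n_mmaps < r->n_mmaps_max))
    return PATH_MMAP_DIRECT;
  /* Otherwise extend the arena's own memory. */
  if (!r->is_main_arena)
    return r->heap_can_grow ? PATH_GROW_HEAP : PATH_NEW_HEAP;
  return r->brk_ok ? PATH_BRK : PATH_MMAP_FALLBACK;
}
```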

systrim

Reduces memory usage by returning the excess memory at the end of the top chunk to the system.

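It calls MORECORE with a negative argument to release the excess memory, ignores the return value, and then calls MORECORE(0) again to find out where the heap now ends.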

static int
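To make the trimming arithmetic and the MORECORE calls concrete, here is a simulation in which a static array plays the role of the heap and a hypothetical fake_morecore moves a simulated program break, like sbrk. The formula for extra (top size minus MINSIZE minus 1 minus pad, rounded down to whole pages) and the "only trim if the break is still where we left it" check follow systrim; the function names and the parameters used in the example (4096-byte pages, MINSIZE = 32) are assumptions.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for MORECORE/sbrk: a simulated program break
   that starts at the end of a static 64 KB "heap". */
static char fake_heap[64 * 1024];
static char *fake_brk = fake_heap + sizeof fake_heap;

static void *fake_morecore (ptrdiff_t increment)
{
  char *old = fake_brk;
  fake_brk += increment;        /* a negative increment releases memory */
  return old;
}

/* a must be a power of two */
#define ALIGN_DOWN_SKETCH(x, a) ((x) & ~((a) - 1))

/* Sketch of systrim: the top chunk starts at `top` and is *top_size bytes.
   Returns 1 if memory was given back, 0 otherwise. */
static int systrim_sketch (char *top, size_t *top_size, size_t pad,
                           size_t pagesize, size_t minsize)
{
  long top_area = (long) *top_size - (long) minsize - 1;
  if (top_area <= (long) pad)
    return 0;

  /* Release whole pages only. */
  size_t extra = ALIGN_DOWN_SKETCH ((size_t) top_area - pad, pagesize);
  if (extra == 0)
    return 0;

  /* Only proceed if the break is still at the end of the top chunk,
     i.e. nobody else called sbrk in the meantime. */
  char *current_brk = fake_morecore (0);
  if (current_brk != top + *top_size)
    return 0;

  fake_morecore (-(ptrdiff_t) extra);   /* return value ignored */
  char *new_brk = fake_morecore (0);    /* where does the heap end now? */
  size_t released = (size_t) (current_brk - new_brk);
  if (released == 0)
    return 0;
  *top_size -= released;
  return 1;
}
```

With a 64 KB top chunk, pad = 0 and the assumed parameters, extra = ALIGN_DOWN(65503, 4096) = 61440, so one 4096-byte page of top chunk remains and a second call finds nothing to trim.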

arena operations

_int_new_arena

- Creates a new heap and initializes the memory-management information (the arena's malloc_state) stored in it.

static mstate
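Besides setting up the heap and its malloc_state, _int_new_arena links the new arena into the global ring of arenas that starts at main_arena (main_arena.next initially points back to main_arena itself). A minimal sketch of just that list manipulation follows; the struct and function names are hypothetical stand-ins, and glibc's locking and memory barrier are omitted.

```c
#include <assert.h>
#include <stddef.h>

struct arena_ring {               /* hypothetical, abbreviated malloc_state */
  struct arena_ring *next;        /* next arena in the global ring */
  size_t attached_threads;        /* threads currently using this arena */
};

/* main_arena starts as a ring of one element that points to itself. */
static struct arena_ring main_arena_sketch = { &main_arena_sketch, 1 };

/* The list update done near the end of _int_new_arena: splice the new
   arena in directly after main_arena. */
static void link_new_arena (struct arena_ring *a)
{
  assert (a != &main_arena_sketch);
  a->attached_threads = 1;        /* the creating thread uses it */
  a->next = main_arena_sketch.next;
  main_arena_sketch.next = a;
}

/* Walk the ring once, roughly the way reused_arena scans for a candidate. */
static size_t count_arenas (void)
{
  size_t n = 1;
  for (struct arena_ring *p = main_arena_sketch.next;
       p != &main_arena_sketch; p = p->next)
    n++;
  return n;
}
```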

Getting an arena

- free_list is a global linked list of free arenas, i.e. arenas that are not currently attached to any thread.

static mstate
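When a thread exits and an arena's attached_threads count drops to zero, glibc pushes the arena onto free_list through its next_free field; get_free_list later pops the head and attaches it to the calling thread. The sketch below models only this LIFO behaviour with hypothetical names, leaving out free_list_lock and the arena mutex.

```c
#include <assert.h>
#include <stddef.h>

struct arena_fl {                  /* hypothetical, abbreviated malloc_state */
  struct arena_fl *next_free;      /* link in the free list */
  size_t attached_threads;         /* threads currently using this arena */
};

static struct arena_fl *free_list_sketch;   /* stand-in for glibc's free_list */

/* Thread exit: detach, and recycle the arena once nobody uses it. */
static void detach_arena_sketch (struct arena_fl *a)
{
  assert (a->attached_threads > 0);
  if (--a->attached_threads == 0)
    {
      a->next_free = free_list_sketch;
      free_list_sketch = a;
    }
}

/* Like get_free_list: reuse the most recently freed arena, if any. */
static struct arena_fl *get_free_list_sketch (void)
{
  struct arena_fl *result = free_list_sketch;
  if (result != NULL)
    {
      free_list_sketch = result->next_free;
      assert (result->attached_threads == 0);
      result->attached_threads = 1;
    }
  return result;
}
```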

heap_trim

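- Compute the amount of excess memory to release (extra) in units of the page size, rounded down to the nearest page boundary.

- If extra is 0 there is nothing to trim, so the function returns 0, meaning no memory was released.

- Check that the current end of the heap is still where it was last set. This avoids problems if a foreign sbrk call moved the heap end before trimming (this check belongs to the sbrk-based main heap, in systrim).

- If the heap may be shrunk by remapping, use MMAP to map the excess heap space afresh as inaccessible (PROT_NONE) pages.

- Otherwise, call __madvise with MADV_DONTNEED to mark the excess heap space as no longer needed.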

/* Shrink a heap. */
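The two shrinking strategies map onto ordinary Linux system calls. The following sketch applies them to an anonymous mapping it creates itself: either the tail is replaced with fresh PROT_NONE pages via mmap with MAP_FIXED, or it stays mapped and is released with madvise(MADV_DONTNEED), after which reads of a private anonymous mapping return zeros. shrink_region and its parameters are hypothetical; glibc's shrink_heap operates on a heap_info, ignores the madvise result, and also updates the heap's size fields.

```c
#define _DEFAULT_SOURCE            /* for MAP_ANONYMOUS and madvise */
#include <assert.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Give back the last diff bytes of [heap, heap + size). Returns 0 on success. */
static int shrink_region (char *heap, size_t size, size_t diff, int may_remap)
{
  assert (diff % (size_t) sysconf (_SC_PAGESIZE) == 0);   /* whole pages only */
  if (diff > size)
    return -1;
  char *tail = heap + (size - diff);
  if (may_remap)
    {
      /* Replace the tail with fresh, inaccessible pages. */
      if (mmap (tail, diff, PROT_NONE,
                MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED, -1, 0) == MAP_FAILED)
        return -2;
    }
  else
    {
      /* Keep the mapping, but let the kernel drop the pages' contents. */
      if (madvise (tail, diff, MADV_DONTNEED) != 0)
        return -3;
    }
  return 0;
}
```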

Internal functions

__libc_malloc

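- If the allocator has not been initialized yet, call ptmalloc_init().

- If the tcache holds a free chunk of the requested size, return it directly (tcache_get).

- Single-threaded: call _int_malloc to allocate from the main arena (main_arena) and tag the returned pointer (tag_new_usable).

- Multi-threaded: call arena_get to obtain a usable arena and call _int_malloc on it. If that fails and an arena was found, call arena_get_retry to obtain another arena and call _int_malloc again.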

void *
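Before any of these paths, the request size is normalized into a chunk size, and in glibc 2.35 __libc_malloc uses that chunk size to pick a tcache bin. The sketch below reproduces the request2size and csize2tidx arithmetic under assumed x86-64 constants (SIZE_SZ = 8, MALLOC_ALIGNMENT = 16, MINSIZE = 32, 64 tcache bins); the _sketch names and tcache_eligible are hypothetical, and the overflow check that checked_request2size also performs is omitted.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed x86-64 constants. */
#define SIZE_SZ_X64    8u
#define ALIGNMENT_X64  16u
#define ALIGN_MASK_X64 (ALIGNMENT_X64 - 1)
#define MINSIZE_X64    32u
#define TCACHE_BINS    64u

/* request2size: add room for the size field, round up to the alignment,
   and never go below MINSIZE. */
static size_t request2size_sketch (size_t req)
{
  if (req + SIZE_SZ_X64 + ALIGN_MASK_X64 < MINSIZE_X64)
    return MINSIZE_X64;
  return (req + SIZE_SZ_X64 + ALIGN_MASK_X64) & ~(size_t) ALIGN_MASK_X64;
}

/* csize2tidx: chunk size -> tcache bin index (32 -> 0, 48 -> 1, ...). */
static size_t csize2tidx_sketch (size_t csize)
{
  assert (csize >= MINSIZE_X64);
  return (csize - MINSIZE_X64 + ALIGNMENT_X64 - 1) / ALIGNMENT_X64;
}

/* Could __libc_malloc serve this request from a tcache bin? */
static int tcache_eligible (size_t req)
{
  return csize2tidx_sketch (request2size_sketch (req)) < TCACHE_BINS;
}
```

So malloc(0) and malloc(24) both use a 32-byte chunk (24 usable bytes, because a chunk may also use the next chunk's prev_size field), malloc(25) needs 48 bytes, and requests up to 1032 bytes fall into the 64 tcache bins.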

__libc_free

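- Convert the user pointer mem into the corresponding chunk pointer p.

- If the chunk was allocated with mmap (mmapped), release it with munmap_chunk. Before unmapping, the dynamic brk/mmap thresholds are adjusted if necessary.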

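- If the chunk was not allocated with mmap:

  - Initialize the thread cache (tcache) if needed.

  - Mark the chunk as belonging to the library again (tag_region).

  - Find the arena the chunk belongs to.

  - Call _int_free to release the chunk.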
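The dynamic threshold adjustment works roughly as follows: freeing an mmapped chunk that is larger than the current mmap threshold (but not above DEFAULT_MMAP_THRESHOLD_MAX) raises the mmap threshold to that chunk's size and sets the trim threshold to twice that value, unless the user has fixed the thresholds. The struct below is a hypothetical subset of glibc's mp_ parameters, and the 32 MB limit assumes a 64-bit build.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption: 64-bit build, where DEFAULT_MMAP_THRESHOLD_MAX is 32 MB. */
#define MMAP_THRESHOLD_MAX_SKETCH ((size_t) 32 * 1024 * 1024)

struct mp_sketch {              /* hypothetical subset of glibc's mp_ */
  size_t mmap_threshold;
  size_t trim_threshold;
  int no_dyn_threshold;         /* nonzero once the user tuned thresholds (mallopt) */
};

/* Run for an mmapped chunk of chunk_size bytes just before munmap_chunk. */
static void adjust_thresholds (struct mp_sketch *mp, size_t chunk_size)
{
  if (!mp->no_dyn_threshold
      && chunk_size > mp->mmap_threshold
      && chunk_size <= MMAP_THRESHOLD_MAX_SKETCH)
    {
      /* Serve similar sizes from the heap next time ... */
      mp->mmap_threshold = chunk_size;
      /* ... and keep more top-chunk memory before trimming. */
      mp->trim_threshold = 2 * mp->mmap_threshold;
    }
}
```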


_int_malloc

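1. If there is no usable arena yet, call sysmalloc directly.

2. If the chunk size is <= max_fast, look for a suitable chunk in the fast bins; if one is found, the allocation is done, otherwise continue.

3. If the chunk size is in small-bin range (below MIN_LARGE_SIZE: 512 B in the classic 32-bit layout, 1024 B on x86-64), look in the small bins; if a chunk is found, the allocation is done.

4. The request is large, or no chunk was found in the small bins:

- For a large request, first consolidate the fast bins (malloc_consolidate): merge adjacent free chunks and link the results into the unsorted bin.

- Walk the chunks in the unsorted bin:

  - If a chunk fits or can be split to satisfy the request, allocate from it and stop.

  - Otherwise, put each chunk into the small bins or large bins according to its size. When the walk is finished, go to 5.

5. The request is large, or no suitable chunk was found in the small bins and the unsorted bin, and all chunks in the fast bins and the unsorted bin have been sorted out:

- Search the large bins: walk the list in reverse (from the smallest chunk) until the first chunk at least as large as the request is found, split it, put the remainder into the unsorted bin, and stop.

6. If neither the fast bins nor the bins yield a suitable chunk, check whether the top chunk is large enough for the requested chunk and, if so, allocate from the top chunk.

7. If the top chunk cannot satisfy the request, it has to be extended:

- In the main arena, if the request is smaller than the mmap threshold (default 128 KB), use brk(); if it is larger, use mmap.

- In a non-main arena, use mmap to allocate the memory.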
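The size tests that decide which bins _int_malloc examines first can be written down directly. This sketch assumes x86-64 defaults (max_fast = 128 bytes from DEFAULT_MXFAST, MIN_LARGE_SIZE = 1024, 16-byte bin spacing); the index formulas mirror malloc.c's fastbin_index and smallbin_index for that configuration, while the enum and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed x86-64 defaults. */
#define MAX_FAST_X64       128u    /* DEFAULT_MXFAST = 64 * SIZE_SZ / 4 */
#define MIN_LARGE_SIZE_X64 1024u   /* 64 small-bin slots * 16-byte spacing */

/* fastbin_index with 16-byte spacing: 32 -> 0, 48 -> 1, ... */
static unsigned fastbin_index_x64 (size_t sz)
{
  assert (sz >= 32);
  return (unsigned) (sz >> 4) - 2;
}

/* smallbin_index with 16-byte spacing: 32 -> 2, ..., 1008 -> 63 */
static unsigned smallbin_index_x64 (size_t sz)
{
  return (unsigned) (sz >> 4);
}

static int in_smallbin_range_x64 (size_t sz)
{
  return sz < MIN_LARGE_SIZE_X64;
}

/* Hypothetical: where _int_malloc starts looking for a chunk of size nb. */
enum start_bin { START_FASTBIN, START_SMALLBIN, START_LARGE };

static enum start_bin first_bin_for (size_t nb)
{
  if (nb <= MAX_FAST_X64)
    return START_FASTBIN;    /* on a miss it continues like a small request */
  if (in_smallbin_range_x64 (nb))
    return START_SMALLBIN;
  return START_LARGE;        /* consolidate fast bins, then scan unsorted */
}
```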

_int_free

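1. If the pointer being freed is NULL, return (in glibc 2.35 this check is done in __libc_free).

2. If the chunk was mapped with mmap, release it with munmap() (via munmap_chunk, also reached from __libc_free).

3. If the chunk is adjacent to the top chunk, merge it directly into the top chunk and go to 7.

4. If the chunk size is > max_fast, put it into the unsorted bin, first checking whether it can be merged with its free neighbours:

- a. If there is nothing to merge, the chunk is simply freed.

- b. If it was merged and the result borders the top chunk, go to 7.

5. If the chunk size is <= max_fast, put it into a fast bin:

- a. A fast bin does not change the chunk's in-use state, so the chunk is not merged with its neighbours at this point; it is simply freed.

- b. Merging of fast-bin chunks is deferred (see 6).

6. When a freed chunk has been merged with its free neighbours and the merged size reaches 64 KB (FASTBIN_CONSOLIDATION_THRESHOLD), fast-bin consolidation (malloc_consolidate) is triggered: every chunk in the fast bins is merged with its free neighbours and placed into the unsorted bin, and a merged chunk that borders the top chunk is merged into the top chunk. Go to 7.

7. If the top chunk is larger than the trim threshold (default 128 KB), try to return part of the top chunk to the operating system (systrim for the main arena, heap_trim for a non-main arena).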

if ((unsigned long)(size) >= FASTBIN_CONSOLIDATION_THRESHOLD) {
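Two details of the free path can be sketched in isolation: fast bins are singly linked LIFO lists (free pushes at the head without clearing the in-use bit or merging), and after a larger chunk has been merged, its size is compared with FASTBIN_CONSOLIDATION_THRESHOLD (65536 in glibc) to decide whether malloc_consolidate should run. The chunk type and function names are hypothetical, and glibc 2.35's safe-linking mangling of the fd pointer is deliberately left out.

```c
#include <assert.h>
#include <stddef.h>

#define FASTBIN_CONSOLIDATION_THRESHOLD 65536UL   /* same value as glibc */

struct fchunk {            /* hypothetical, abbreviated chunk */
  size_t size;             /* size | flags; freeing into a fast bin leaves
                              the next chunk's PREV_INUSE bit set */
  struct fchunk *fd;       /* next chunk in the same fast bin */
};

/* free(): push at the head, no merging (LIFO). */
static void fastbin_push (struct fchunk **bin, struct fchunk *p)
{
  p->fd = *bin;
  *bin = p;
}

/* malloc(): pop the most recently freed chunk. */
static struct fchunk *fastbin_pop (struct fchunk **bin)
{
  struct fchunk *p = *bin;
  if (p != NULL)
    *bin = p->fd;
  return p;
}

/* After a non-fast chunk has been merged with its free neighbours:
   should malloc_consolidate run? */
static int should_consolidate (size_t merged_size, int have_fastchunks)
{
  return merged_size >= FASTBIN_CONSOLIDATION_THRESHOLD && have_fastchunks;
}
```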